9.3. Moments

Moments are numerical values that are commonly used to characterize the distribution of a random variable. They are expected values of powers of the random variable. The most common moments are the mean, which is the first moment, and the variance, which is the second central moment.
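As a brief illustration of "expected values of powers", the standard definitions for a continuous random variable \(X\) with density \(f_X(x)\) and mean \(\mu_X\) can be written as below. The symbols \(f_X\), \(\mu_X\), and \(n\) are notation assumed here and may differ from the notation used elsewhere in the book.

\[
E\left[X^n\right] = \int_{-\infty}^{\infty} x^n f_X(x)\, dx,
\qquad
E\left[(X-\mu_X)^n\right] = \int_{-\infty}^{\infty} (x-\mu_X)^n f_X(x)\, dx .
\]

The first expression is the \(n\)th moment and the second is the \(n\)th central moment. Setting \(n=1\) in the first gives the mean, and setting \(n=2\) in the second gives the variance. In both cases the factor multiplying \(f_X(x)\) acts as a weighting function on the density.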

In this section of Foundations of Data Science with Python, you will learn:

the definition of moments and central moments,

how to interpret moments as integrals that apply weighting functions to different parts of the probability density,

the definition of variance and standard deviation for random variables,

why variance measures the “spread” of a distribution,

how to use SymPy to compute means and variances of continuous random variables, and

important properties of variance that are used in later sections of the book.
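The following is a minimal sketch of how SymPy might be used to compute a mean and a variance by integration. It assumes an exponential density with rate 2, chosen purely for illustration and not taken from the book's examples; the variable names are likewise hypothetical.

```python
import sympy as sp

x = sp.symbols('x', real=True)

# Assumed example density: exponential with rate 2, supported on [0, oo)
lam = sp.Integer(2)
f = lam * sp.exp(-lam * x)

# Mean: the first moment, the integral of x times the density
mean = sp.integrate(x * f, (x, 0, sp.oo))

# Second moment: the integral of x**2 times the density
second_moment = sp.integrate(x**2 * f, (x, 0, sp.oo))

# Variance: the second central moment, weighting the density by (x - mean)**2
variance = sp.integrate((x - mean)**2 * f, (x, 0, sp.oo))

print("mean =", mean)
print("variance =", variance)
print("E[X^2] - mean^2 =", second_moment - mean**2)
```

The last line checks the usual identity that the variance equals the second moment minus the squared mean, so the two printed variance values should agree.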

9.3.1. Terminology and Properties Review

Use the flashcards below to help you review the terminology introduced in this chapter.

9.3.2. Code and Proof from Section 9.3

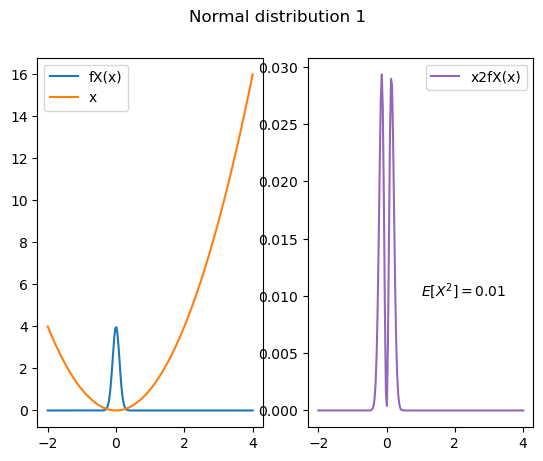

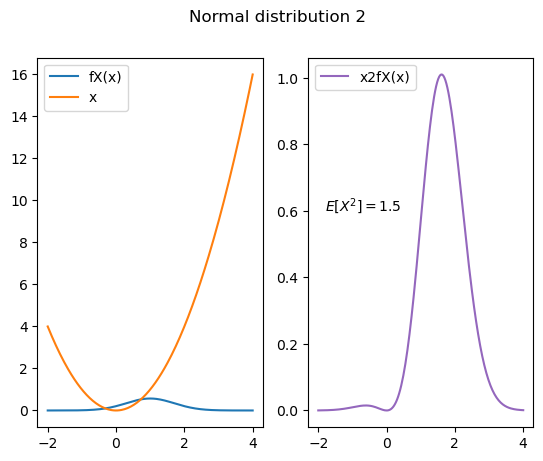

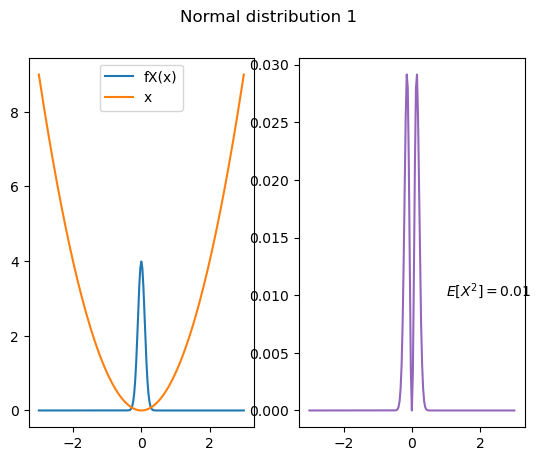

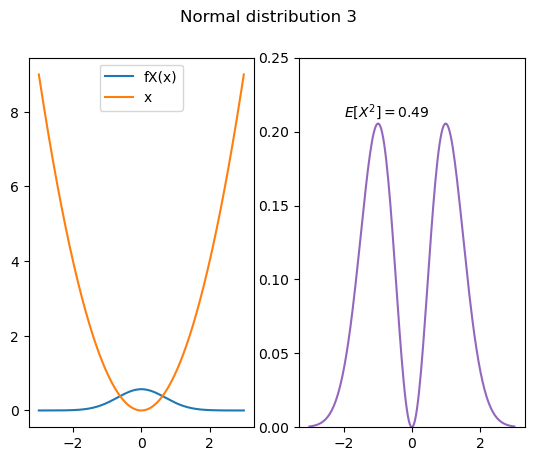

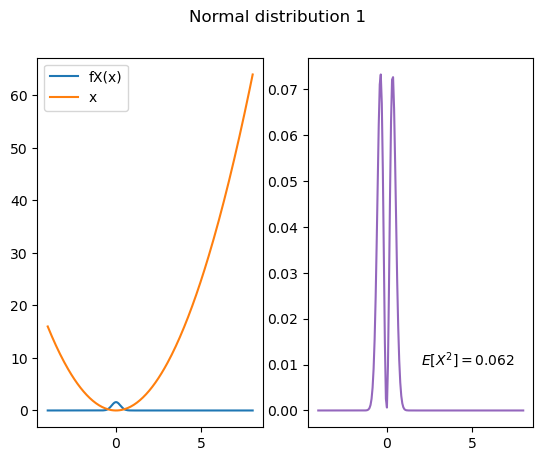

Code for Fig 9.6

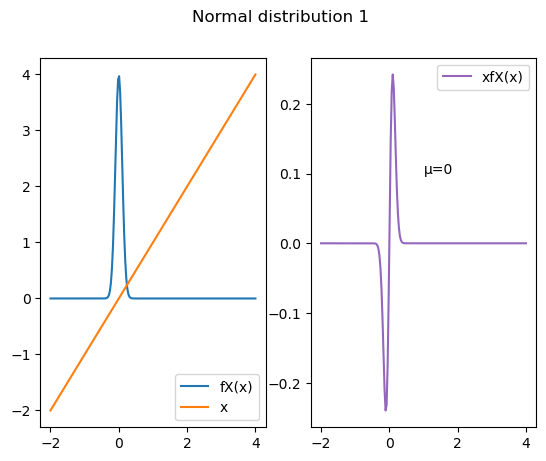

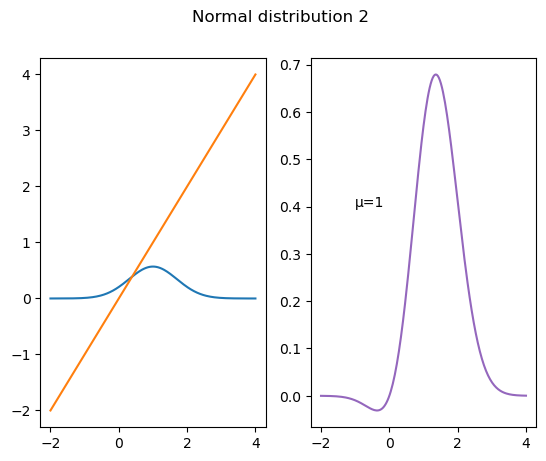

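Proof that the mean of a Normal random variable is the parameter \(\mu\)

Starting from the definition of the mean and the Normal density function,

\[
E[X] = \int_{-\infty}^{\infty} x \, \frac{1}{\sigma\sqrt{2\pi}} \exp\left(-\frac{(x-\mu)^2}{2\sigma^2}\right) dx .
\]

Now we add and subtract a term, \(\mu\), in the factor that multiplies the density:

\[
E[X] = \int_{-\infty}^{\infty} (x-\mu) \, \frac{1}{\sigma\sqrt{2\pi}} \exp\left(-\frac{(x-\mu)^2}{2\sigma^2}\right) dx
+ \int_{-\infty}^{\infty} \mu \, \frac{1}{\sigma\sqrt{2\pi}} \exp\left(-\frac{(x-\mu)^2}{2\sigma^2}\right) dx .
\]

The first integral is 0 because of odd symmetry around \(\mu\). This may be confusing to those who are used to seeing odd symmetry only around 0, so let’s do the change of variable \(u = x-\mu\). With this change of variable, \(du = dx\) and the region of integration is still \((-\infty, \infty)\):

\[
E[X] = \int_{-\infty}^{\infty} u \, \frac{1}{\sigma\sqrt{2\pi}} \exp\left(-\frac{u^2}{2\sigma^2}\right) du
+ \mu \int_{-\infty}^{\infty} \frac{1}{\sigma\sqrt{2\pi}} \exp\left(-\frac{u^2}{2\sigma^2}\right) du .
\]

In addition to the change of variable, I pulled the constant term \(\mu\) outside the second integral. The first integral is 0 because its integrand has odd symmetry around 0. The second integral is 1 because it is the integral of a Normal density function (with mean 0) over its entire range. So, \(E[X] = 0 + \mu \cdot 1 = \mu\).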
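The two integrals in this proof can also be checked numerically. The sketch below is not part of the proof; it assumes particular values \(\mu = 2\) and \(\sigma = 1.5\) (hypothetical, chosen only for illustration) and uses `scipy.integrate.quad` to evaluate each piece after the change of variable \(u = x - \mu\).

```python
import numpy as np
from scipy import integrate, stats

# Hypothetical parameter values, chosen only for this check
mu, sigma = 2.0, 1.5

# Zero-mean Normal density in the shifted variable u = x - mu
g = stats.norm(loc=0, scale=sigma).pdf

# First integral: u * g(u) has odd symmetry around 0, so it should be about 0
first, _ = integrate.quad(lambda u: u * g(u), -np.inf, np.inf)

# Second integral: mu times the total area under the density, which should be about mu
area, _ = integrate.quad(g, -np.inf, np.inf)
second = mu * area

# Direct numerical evaluation of E[X] without the change of variable
f = stats.norm(loc=mu, scale=sigma).pdf
direct, _ = integrate.quad(lambda x: x * f(x), -np.inf, np.inf)

print("first integral:", first)
print("second integral:", second)
print("direct E[X]:", direct)
```

Up to numerical integration error, the first value should be close to 0 and the other two should be close to the assumed value of \(\mu\).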

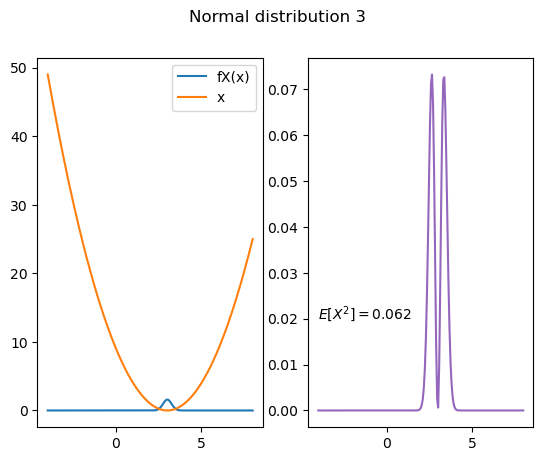

Code for Figure 9.7

Code for Fig. 9.8

Code for Fig. 9.9

Code for Fig. 9.10

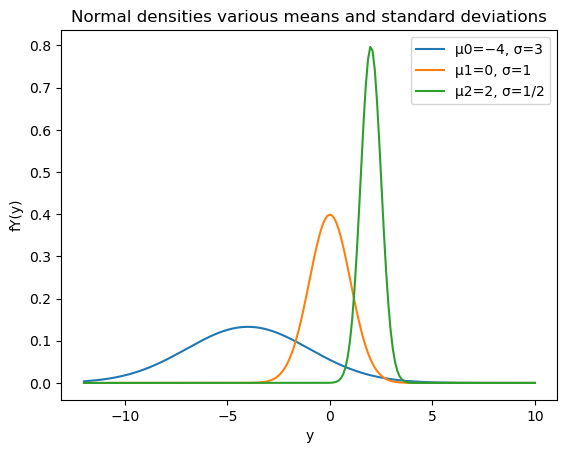

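```python
import matplotlib.pyplot as plt
import numpy as np
import scipy.stats as stats

# Normal random variables with different means and standard deviations
Y0 = stats.norm(loc=-4, scale=3)
Y1 = stats.norm(loc=0, scale=1)
Y2 = stats.norm(loc=2, scale=1/2)

# Evaluate and plot each density on a common grid of y values
y = np.linspace(-12, 10, 201)
plt.plot(y, Y0.pdf(y), label='μ0=−4, σ=3')
plt.plot(y, Y1.pdf(y), label='μ1=0, σ=1')
plt.plot(y, Y2.pdf(y), label='μ2=2, σ=1/2')

plt.title('Normal densities with various means and standard deviations')
plt.xlabel('y')
plt.ylabel('fY(y)')
plt.legend();
```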
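As a possible follow-up to the plot, the moments of these three distributions can be read directly from the SciPy distribution objects. This is a small sketch rather than code from the book; it re-creates the same three Normal random variables and uses the `mean`, `var`, `std`, and `moment` methods of frozen `scipy.stats` distributions.

```python
import scipy.stats as stats

# The same three Normal random variables used in the plot
Y0 = stats.norm(loc=-4, scale=3)
Y1 = stats.norm(loc=0, scale=1)
Y2 = stats.norm(loc=2, scale=1/2)

# Mean, variance, and standard deviation of each distribution
for name, Y in [("Y0", Y0), ("Y1", Y1), ("Y2", Y2)]:
    print(f"{name}: mean = {Y.mean():.2f}, "
          f"variance = {Y.var():.2f}, std = {Y.std():.2f}")

# moment(n) returns the n-th (non-central) moment E[Y^n], so the
# variance can also be recovered as E[Y^2] - (E[Y])^2
var_from_moments = Y0.moment(2) - Y0.moment(1) ** 2
print("Y0 variance from moments:", var_from_moments)
```

The distribution with the largest standard deviation (`Y0`) is the widest and flattest curve in the plot, which is one way to see that variance measures spread.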